Why do we need activation functions in Neural Networks?

- October 03, 2018

- By Pawan Prasad


A Neural Network is a mathematical model that mimics the way biological neurons work. Like its biological counterpart, it has the ability to learn. It is composed of a large number of highly interconnected processing elements (neurons) working in unison to solve specific problems, which it learns to do from the dataset provided to the network. Each neuron is capable of processing input information, and the connections between these neurons let the information signal flow through the network.

To understand this, suppose I give you a typical age problem. To solve it, you would come up with an equation that relates the input to the output. This is called logical modeling: we use logic to find the relationship between input and output. With a Neural Network, we instead train the model on a large dataset that embodies this relationship, and the network learns the relation between input and output by finding the pattern in the data. The age problem is very simple, but when we scale up to more complex problems with many inputs, finding the pattern is beyond the scope of the traditional logical modeling method. The Neural Network has the upper hand in these cases because it is capable of learning complex patterns.

Fig 1


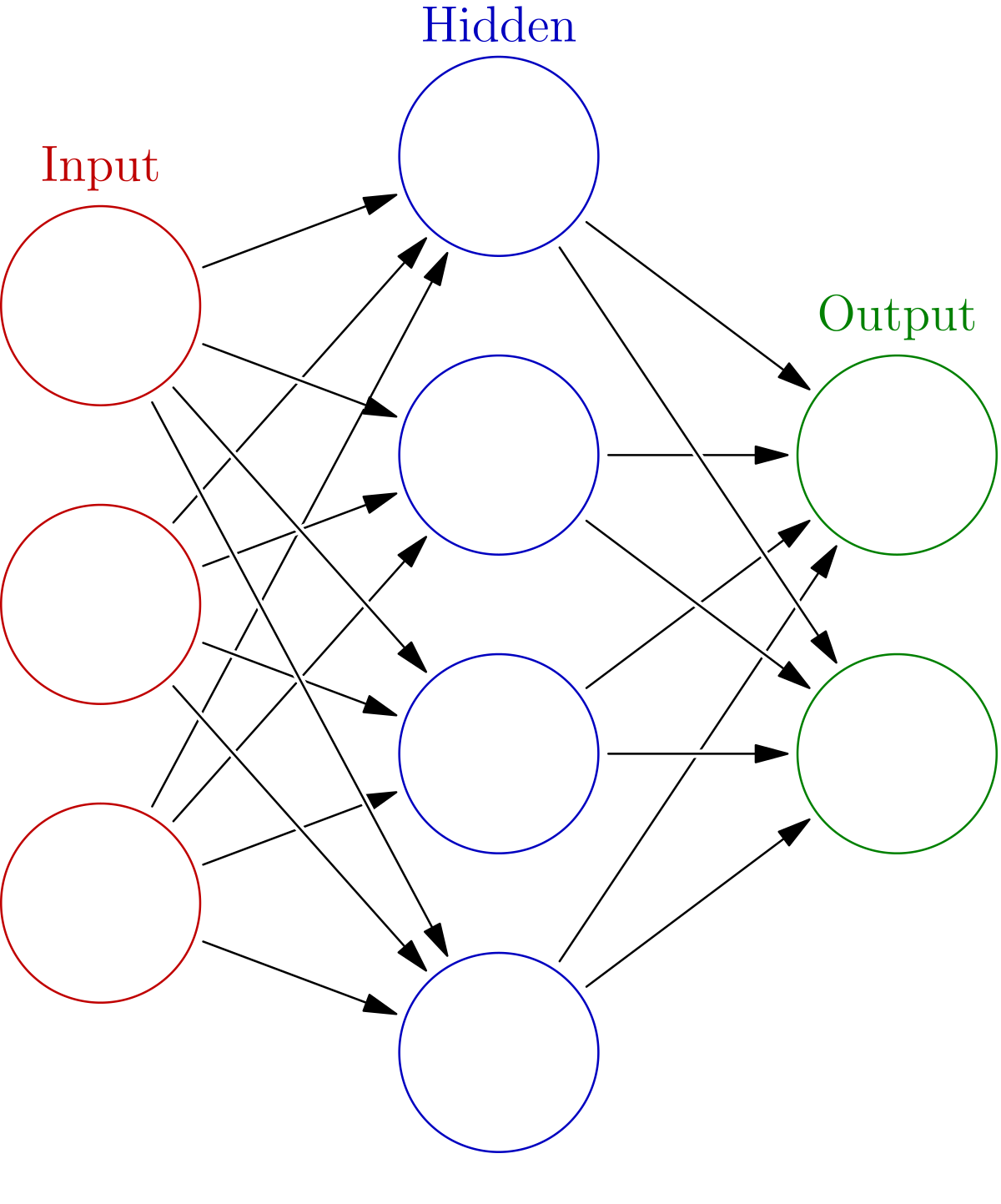

Why do we need an activation function in Neural Networks? How is our Neural Network going to behave if we don't use an activation function at all? We are going to answer these questions in this post. I will try to explain the concepts as clearly as I can, using mathematical equations.

We know that Neural Networks are Universal Function Approximators, which means a Neural Network can learn to behave like almost any complex function if we feed the network enough data. In short, it has the capability of learning a complex function from the data itself. For that, there has to be a complex non-linear mapping between the input and output of the Neural Network, and this is achieved by using non-linear activations between the layers of the network. Otherwise, the network reduces to a simple linear transformation, since a sequence of linear operations is itself a linear operation. Let us understand this through the equations for the network in fig 1.

Consider the Neural Network in fig 1, which has one hidden layer:

X = input to the network

W = weight in the layers

B = bias
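The equations discussed in the next paragraph can be written out for the network in fig 1 roughly as follows. This is a reconstruction from the surrounding description, not a copy of the original figure: the layer subscripts 1 and 2, the symbol A for a layer's activation, and the combined terms W' and B' are assumed notation.

```latex
\begin{align}
A_1 &= g(W_1 X + B_1) \tag{a} \\
A_2 &= g(W_2 A_1 + B_2) \tag{b} \\
A_1 &= W_1 X + B_1 \tag{1} \\
A_2 &= W_2 A_1 + B_2 \tag{2} \\
A_2 &= W_2 (W_1 X + B_1) + B_2 \tag{3} \\
A_2 &= (W_2 W_1) X + (W_2 B_1 + B_2) = W' X + B' \tag{4}
\end{align}
```

Here (a) and (b) are the layers with the activation g, (1) and (2) are the same layers with g removed, and (3) and (4) substitute (1) into (2) and group the terms.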

Equations (a) and (b) use an activation function, denoted by g. Equations (1) to (4) show how the output is affected when no activation function is applied in the network. In equation (1), the activation of layer 1 is the input X times the weight W of layer 1, plus the bias B of layer 1. This signal, the output of layer 1, is then used as the input to layer 2 (from fig 1, the activation in layer 2 is the output of the network). From equations (3) and (4) we can see that the output depends only linearly on the input X. The network merely applies a linear transformation to the input, so the mapping between inputs and outputs is linear and our Neural Network can learn only linear functions.
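The same collapse can be checked numerically. The sketch below is only an illustration under assumed settings: the layer sizes, the random weights, and the choice of tanh as the activation g are all hypothetical and not taken from the original post. It compares a two-layer network without activation against a single linear layer built from the combined weights, and then repeats the comparison with tanh switched on.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed so the run is repeatable

# Hypothetical sizes: 3 inputs, 4 hidden units, 2 outputs, 5 samples.
X = rng.normal(size=(3, 5))
W1, B1 = rng.normal(size=(4, 3)), rng.normal(size=(4, 1))
W2, B2 = rng.normal(size=(2, 4)), rng.normal(size=(2, 1))


def identity(z):
    """Stand-in for 'no activation function'."""
    return z


def forward(X, g):
    """Two-layer forward pass with activation g applied after each layer."""
    A1 = g(W1 @ X + B1)
    return g(W2 @ A1 + B2)


# Combine the two layers into one: W' = W2 W1 and B' = W2 B1 + B2.
W_combined = W2 @ W1
B_combined = W2 @ B1 + B2
collapsed_out = W_combined @ X + B_combined

# Without an activation, the two-layer output equals the single layer.
linear_out = forward(X, identity)
collapses = np.allclose(linear_out, collapsed_out)

# With tanh as g, that same single layer no longer matches the output.
tanh_out = forward(X, np.tanh)
tanh_matches = np.allclose(tanh_out, collapsed_out)

print("no activation collapses to one layer:", collapses)
print("tanh network equals that layer:", tanh_matches)
```

The first check is the numerical counterpart of substituting layer 1 into layer 2. The second check only shows that this particular combined layer stops matching once g is non-linear. It is a hint rather than a proof that no linear layer could match.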

So we can conclude that we use activation functions to introduce non-linearity into the network, so that it can learn complex non-linear functions.
